As generative AI becomes more accessible, many organizations are asking the same question: How do we introduce it responsibly and effectively? Here’s how we approached that challenge over the past year.

Piloting Copilot: What Worked, What Didn’t

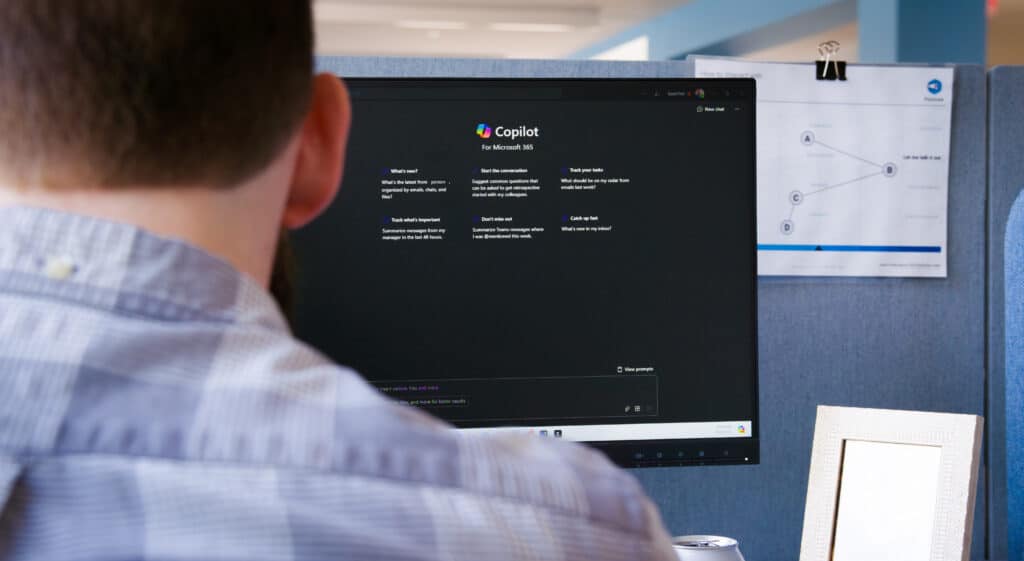

In 2024, we piloted Microsoft Copilot internally to explore how generative AI could enhance daily work. The pilot was productive—but we quickly learned that adoption isn’t just about giving people new tools. It’s about setting direction, establishing guardrails, and enabling responsible use through policy, training, and hands-on experimentation.

We introduced Copilot across Teams, Outlook, Word, and SharePoint. It made tasks like summarizing emails, drafting content, and surfacing documentation faster and easier. But usage varied. Some team members jumped in; others held back. Key questions emerged: What data is Copilot using? When should we trust its output? Where are the boundaries?

We realized access alone wasn’t enough—our teams needed clarity and confidence.

Setting Boundaries with Policy

To lay that foundation, we wrote an internal AI use policy. It defined appropriate use cases, clarified when human oversight was needed, and explained how to handle sensitive data. It also covered when and how to disclose AI-generated content.

The goal wasn’t to restrict creativity—it was to build trust and accountability from the start.

Explore more in our post: AI for SMBs

Training with Context, Not Just Features

We followed up with a “Lunch & Learn” to introduce Microsoft 365 Copilot. We grounded the session in team feedback, shared real-world examples, and walked through everyday scenarios. This session kicked off a deeper learning path: foundational concepts, prompt design, practical use cases, and ethical considerations.

We backed this with self-serve resources—internal guides, decks, and walkthroughs—so everyone could learn at their own pace.

Our goal wasn’t to create instant experts. It was to build comfort, clarity, and consistency.

Experimenting with Structure and Intent

Our internal AI Focus Group continued to test tools across Microsoft 365 and beyond, including SharePoint Premium, Copilot Studio, Pages, and Notebooks. We also evaluated external tools such as Perplexity, Gemini, and ChatGPT, and we are currently piloting Thread’s “Magic Agents.”

This experimentation had a dual purpose: to help our teams adopt AI with confidence—and to prepare us for smarter, more informed conversations with clients.

Every tool taught us something. Together, they reinforced a core insight: When curiosity leads, capability follows.

A Practical Approach to AI Adoption

For organizations exploring AI in 2025 and beyond, here’s what we recommend:

- Start with a readiness assessment—understand your people, processes, and data.

- Define boundaries and expectations before enabling new tools.

- Don’t just train on features—teach relevance and impact.

- Choose one focused use case to pilot, and document the results.

- Involve both decision-makers and everyday users from the start.

Whether you’re a team of five or fifty, a structured, inclusive approach helps AI adoption stick.

Explore more in our post: Building Ethical AI Practices

Looking Ahead

We’re still exploring new tools and capabilities, but now with a framework in place. We pilot with intent, train with purpose, measure outcomes, and scale only when the value is clear.

That shift—from “What can this do?” to “What should this do for us?”—has made all the difference.

If you’re interested in learning more about how GadellNet can support your Microsoft Copilot journey, contact us today.